I have been part of some of the best conversion optimization teams in the world, and they seem to have an intuitive sense of how to run the best experiments. The people on these teams share a similar mindset.

I wanted to turn this into a process, one that could teach any organization how to run better experiments, and to make that mindset explicit in a way that is fun to use.

What follows is not rocket science, but this framework may well help your team build a more efficient optimization culture.

Table of contents

- Introducing the Experiment Canvas: The Easiest Way to Test Your Ideas

- Why another canvas?

- Put facts at the core of your experiment

- Trigger your audience through a touchpoint

- Watch the desired behavior appear in the data

- Reverse Engineering a Piece of E-commerce History

- Selling More Chanterelles (or Any Other Variable Priced Good)

- Conclusion

Introducing the Experiment Canvas: The Easiest Way to Test Your Ideas

As cofounder of Science Rockstars, the company that created PersuasionAPI, and a former employee at Booking.com, I am biased toward using the science of persuasion to create experiments. Let me be very clear about that.

But after thousands of experiments, I also know that blindly applying the Cialdini principles will not cut it.

Most of the Cialdini principles are pretty straightforward to implement, and on many occasions they will improve conversion. But in many cases they don't.

That's why I primarily focus on learning by experimentation instead. The main idea behind experimentation is that a company continuously learns what works in its context, and what doesn't.

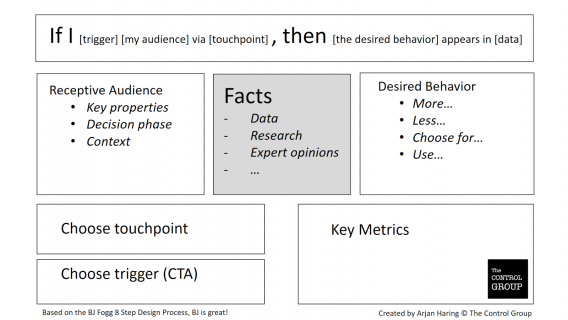

This is where the Experiment Canvas comes in. While training and consulting with companies, I continuously test my approach to making them better experimenters. This is the most recent edition of the Experiment Canvas, and I would like to share it with you.

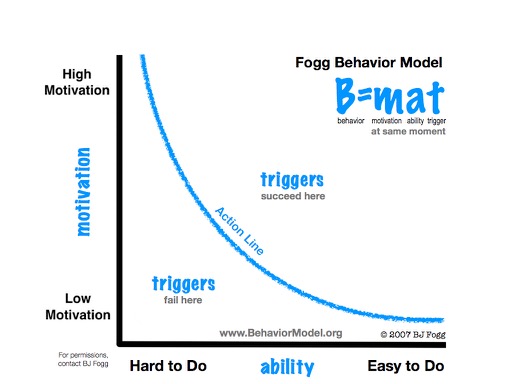

Two very practical approaches I used to create this canvas are BJ Fogg's 8-Step Design Process and the Lean Startup method.

I adapted both approaches to fit the needs of today's companies. The Experiment Canvas fits a fact-based marketing mindset: the idea that marketing should be driven less by opinions and more by, well… facts.

Why another canvas?

To run effective and efficient experiments, a common language is helpful. Companies can also roll out experiments more easily if they have an approach that is easy to understand yet systematic. By following these 6 steps, people or teams can formulate a clear hypothesis that is ready to be tested:

- Facts

- Receptive Audience

- Touchpoint

- Trigger

- Desired Behavior

- Key Metrics

Put facts at the core of your experiment

By sticking to the facts, you make it more likely that your marketing efforts will be effective. But how trustworthy would you rate the following "facts"?

- An expert’s opinion

- Outcomes of a user test

- Competitor Analysis

- Outcomes of an A/B test

- Scientific research

- NPS score

- And so on…

Would you trust a conversion expert's opinion more than the outcome of a previous A/B test?

What do you actually measure with an NPS score, and how does it compare to the insights you gain from user testing?

These are all open to discussion, but the aim stays the same: always base the new marketing ideas you want to test on the most trustworthy facts. That means taking a closer look at the validity and reliability of your research methods.

How valid is an NPS score, for example?

The core question behind NPS is "How likely is it that you would recommend our company/product/service to a friend or colleague?", and people answer it on a 0 to 10 scale. Validity is about whether the research actually answers the question you are after.
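For reference, the commonly used NPS calculation buckets answers of 9 or 10 as promoters and 0 to 6 as detractors, and reports the percentage of promoters minus the percentage of detractors (7 and 8 are passives and only count toward the total). The short Python sketch below shows that arithmetic; the function name and the sample answers are made up for illustration. However precisely you compute it, the input is still what people say they would do.

```python
def net_promoter_score(scores: list[int]) -> float:
    """NPS = % promoters (9-10) minus % detractors (0-6), on a -100..100 scale."""
    if not scores:
        raise ValueError("need at least one response")
    if any(s < 0 or s > 10 for s in scores):
        raise ValueError("scores must be on the 0-10 scale")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    # Passives (7-8) are not counted on either side but stay in the denominator.
    return 100.0 * (promoters - detractors) / len(scores)


# Hypothetical survey answers, for illustration only.
answers = [10, 9, 8, 7, 6, 3, 10, 9, 5, 8]
print(net_promoter_score(answers))
```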

Personally, I am not interested in what people say they will do; I would rather stick to observational data of actual behavior.

Trigger your audience through a touchpoint

The science of persuasion (again, BJ Fogg's work is helpful here) tells us that you have the highest chance of conversion when a touchpoint fits into the existing customer journey of an audience that is already motivated to act.

Triggering the desired behavior with a call-to-action closes that loop and maximizes the chance that customers act.

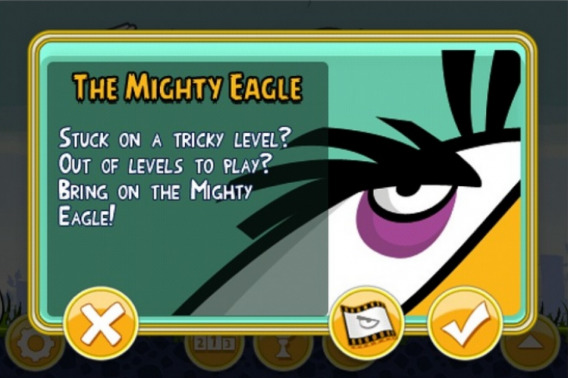

The most brilliant example of an effective trigger I have seen, one where the touchpoint fits perfectly into the customer journey of highly motivated people, was spotted by the bright guys over at Buyerminds.

When you get stuck on a level in the famous Angry Birds game (you are highly motivated to finish the level), a pop-up (touchpoint) appears offering you a free pass to the next level by "bringing on the Mighty Eagle" (trigger: the CTA).

Watch the desired behavior appear in the data

You probably want your customers to do a lot of things:

- Sign up for the newsletter

- Give a review

- Put an additional product in the shopping cart

- Use your service every day

- Check out

- Not call your toll-free customer service line

- Read the terms of agreement page

- Click on the pretty banners

- And so on…

It's important that you clearly define what the desired behavior is, as well as how you will capture it.

Do you have access to the call center data and can you connect it to the people that are using the self service pages on your website?

What data will tell you that a product added to the shopping cart really is an additional product, and was not already on this customer's initial wishlist?
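To make the wishlist question concrete, here is a minimal Python sketch of one way to count only truly additional products. It assumes, hypothetically, that you can export add-to-cart events as (customer, product) pairs and each customer's wishlist as a set of product IDs; the `CartEvent` type and `additional_products` function are illustrative names, not part of any real tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CartEvent:
    # Hypothetical shape of an exported add-to-cart event.
    customer_id: str
    product_id: str


def additional_products(events: list[CartEvent],
                        wishlists: dict[str, set[str]]) -> dict[str, int]:
    """Count, per customer, add-to-cart events for products NOT on their wishlist."""
    counts: dict[str, int] = {}
    for event in events:
        wished = wishlists.get(event.customer_id, set())
        if event.product_id not in wished:
            counts[event.customer_id] = counts.get(event.customer_id, 0) + 1
    return counts


# Illustrative data: c1 had p1 on their wishlist already, so only p2 counts.
wishlists = {"c1": {"p1"}, "c2": set()}
events = [CartEvent("c1", "p1"), CartEvent("c1", "p2"), CartEvent("c2", "p3")]
print(additional_products(events, wishlists))
```

Customers without a known wishlist are treated as having an empty one here; whether that is the right default depends on how complete your wishlist data is.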

If you have thought all of this through, filling in the following hypothesis will be easy:

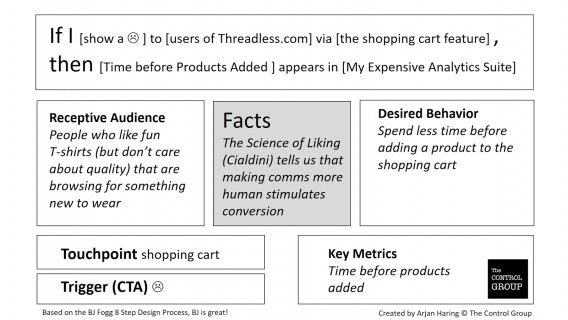

If I [trigger] [my audience] via [touchpoint], then [the desired behavior] appears in [data]
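If your team keeps canvases digitally, the six steps map naturally onto a small record that renders the hypothesis sentence. The Python sketch below is only one possible representation; the class name, the field names, and the Angry Birds values are illustrative, and "key metrics" stands in for the [data] slot of the template.

```python
from dataclasses import dataclass


@dataclass
class ExperimentCanvas:
    # One field per canvas step; the names are illustrative, not an official schema.
    facts: str
    receptive_audience: str
    touchpoint: str
    trigger: str
    desired_behavior: str
    key_metrics: str

    def hypothesis(self) -> str:
        # Mirrors: If I [trigger] [my audience] via [touchpoint],
        # then [the desired behavior] appears in [data]
        return (f"If I {self.trigger} {self.receptive_audience} via {self.touchpoint}, "
                f"then {self.desired_behavior} appears in {self.key_metrics}")


# Illustrative values loosely based on the Angry Birds example.
canvas = ExperimentCanvas(
    facts="Motivated users act when triggered within their journey (Fogg)",
    receptive_audience="players stuck on a level",
    touchpoint="a pop-up",
    trigger="offer a free pass to",
    desired_behavior="an increase in Mighty Eagle activations",
    key_metrics="the game's event logs",
)
print(canvas.hypothesis())
```

Facts are kept on the record even though they do not appear in the sentence, so every hypothesis stays traceable to the evidence it was based on.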

This hypothesis, together with the filled-in Experiment Canvas, is the start of your next experiment. All that remains is to translate the hypothesis into an actual piece of content or a feature for your website.
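Once the experiment has run, you still need to decide whether the desired behavior really "appears in the data". One common approach for a conversion-style key metric is a two-sided two-proportion z-test comparing control and variant; the Python sketch below shows it under the assumption of a fixed sample size decided in advance and a single key metric. This is a generic statistical technique, not something the canvas itself prescribes, and the counts in the usage lines are made up.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference in conversion rate (B vs. A).

    Returns (z, p_value). Assumes independent visitors and a sample size
    fixed before the test started (no peeking).
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # both groups at 0% or 100%: nothing to compare
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: control 200/10,000 converted, variant 250/10,000.
z, p = two_proportion_z_test(200, 10_000, 250, 10_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```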

Reverse Engineering a Piece of E-commerce History

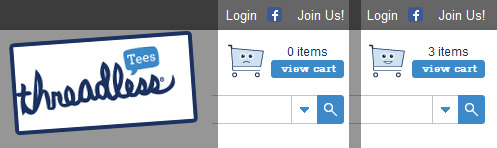

The quality of Threadless t-shirts is terrible, but their creative persuasion techniques were pretty damn awesome.

Back in the day (2011), someone on their team came up with the idea of making the empty shopping cart sad. When you added products to your cart, its mood would change and it would become happy.

This kind of subtle user feedback gives me goose bumps. It truly touches my soul. But does this piece of e-commerce history also hold up in an experiment? Better yet, what was the whole idea behind this aesthetically pleasing piece of conversion magic?

To clarify the use of the Experiment Canvas, I will deconstruct the Threadless bipolar shopping cart as if I had come up with the idea myself and wanted to create an experiment to see whether it would work.

“Good morning boss”. “What is it Arjan?” “Wouldn’t it be cool if our shopping cart would have a severe case of bipolar disorder?” “Can you leave me alone Arjan?” “But…”

Threadless always leaned heavily on Cialdini principles such as scarcity, so I can imagine they tried the other principles as well. I wasn't on their team, so I am only guessing here, but they may have wanted to experiment with Cialdini's principle of liking.

Where to start? In a brainstorm, they decided they wanted people to spend less time before adding a product to the shopping cart, reasoning that this would make people more likely to buy in the end (which, of course, is a different metric, with more complex data to test).

To get people to add a product to the shopping cart sooner, they finally got excited about making the shopping cart itself more playful and inviting. Their products are playful, so they figured their audience would also be open to such playfulness.
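The desired behavior here, adding a product sooner, has to be captured somehow. A minimal Python sketch follows, assuming a hypothetical event log of `(session_id, variant, event_name, timestamp_seconds)` tuples where a session starts at its first event and `"add_to_cart"` is the event name; the variant labels and numbers are invented. It measures time to the first add-to-cart per session, grouped by variant.

```python
from statistics import median


def seconds_to_first_add(events):
    """events: iterable of (session_id, variant, event_name, timestamp_seconds).

    Returns {variant: [seconds from a session's first event to its first
    add_to_cart, ...]}. Sessions without any add_to_cart are left out.
    """
    start, first_add, variant_of = {}, {}, {}
    for session_id, variant, name, ts in sorted(events, key=lambda e: e[3]):
        variant_of[session_id] = variant
        start.setdefault(session_id, ts)  # earliest event marks session start
        if name == "add_to_cart" and session_id not in first_add:
            first_add[session_id] = ts
    result = {}
    for session_id, ts in first_add.items():
        result.setdefault(variant_of[session_id], []).append(ts - start[session_id])
    return result


# Hypothetical log with a "sad_cart" variant and a control group.
events = [
    ("s1", "sad_cart", "page_view", 0), ("s1", "sad_cart", "add_to_cart", 30),
    ("s2", "control", "page_view", 0), ("s2", "control", "add_to_cart", 50),
    ("s3", "control", "page_view", 10), ("s3", "control", "add_to_cart", 30),
]
times = seconds_to_first_add(events)
print({variant: median(values) for variant, values in times.items()})
```

Because sessions that never add anything are dropped, this metric should be read alongside the add-to-cart rate itself; a variant could look "faster" simply by losing its slower visitors.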

That's where someone had a eureka moment: personify the shopping cart and emotionally blackmail people into adding something to it. Does this sound credible? You tell me. At least we now have a better way of talking about it, and of running our own experiment to see whether it works in our context.

Selling More Chanterelles (or Any Other Variable Priced Good)

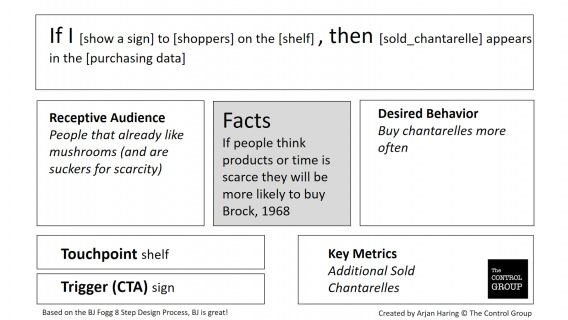

However much I would like to, I can't use experiments and data from clients to show how easily you can create an experiment with the canvas. So let's use a hypothetical experiment instead: selling a product whose price fluctuates a lot, chanterelles.

Around September, when chanterelles are in season, prices drop considerably and vary daily.

What kind of experiment could we run to make existing mushroom lovers buy more chanterelles?

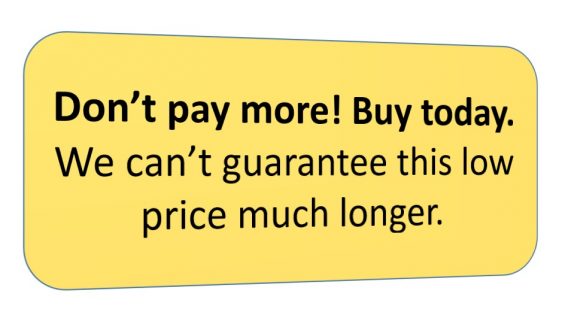

As mentioned, we are not using data from prior research or from clients' data sources, so I will use Cialdini's principle of scarcity as the fact to base my new experiment on.

The actual implementation of this experiment could look like the sign below, but there are many more alternatives that you could test separately.

As I said, the most important win I see with the Experiment Canvas is that people and teams can communicate their ideas more clearly (including to separate data or analytics departments), that marketers can systematically create new experiments, and that the focus always stays on the facts. It's a way to marry creativity with data and get the best of both worlds.

It's very humbling to see how readily marketers accept the Experiment Canvas. In most cases, after their first contact with the canvas, marketers print copies and start using it for their own experiments. Management does notice this organized way of handling experiments.

But senior management needs to fully support the idea of innovation through experimentation before you can optimize the experimentation process. Big things start small, and every experiment the canvas helps facilitate is a lesson learned.

Conclusion

If you want to jump on the experimentation train and develop an experimentation mindset within your organization, you can. Start competing with the best out there by taking a few easy-to-follow steps and generating tons of sensible experiments. Learning from these experiments will take a bit more effort, but at least you will have laid the groundwork.

I am still learning every day how to improve the ability of organizations to learn from experiments and I would love to learn from you.

What do you think of the canvas? And of the quality of Threadless T-shirts?

This kind of article gives me goose bumps. It truly touches my soul.

I like the systematic approach. I love the examples.

Thanks Arjan

Wow Ewald, that’s very nice to hear. Keep up the good work!

I will test it out in class tomorrow.

Bram, cool! Things get better through testing ;) And if you need materials, you can use the BJ Fogg paper I used. Where I use "touchpoint", you can check how BJ uses "(technology) channel". Would love to hear how it works out for you!

I love this! I also think you could further help businesses organize their testing process by adding a complementary slide to the canvas in which they document the experiment’s results, what they’ve actually learned about their target customer from it, and what new tests could be run based on what they’ve learned.

From what I’ve seen, so many companies (big and small) take a wildly sporadic approach to testing: they run tests, only take note of whether it succeeded or failed, and then start fresh with a completely new test, inspired by a totally different train of thought/research study/internal meeting.

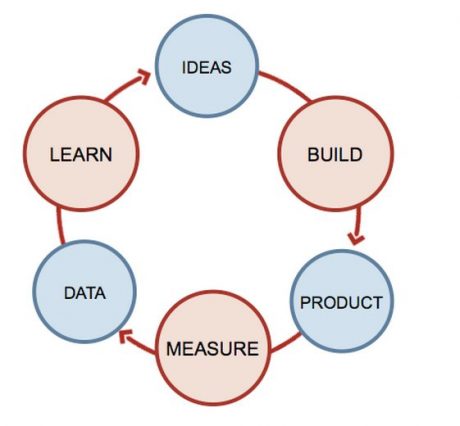

Ideally, I think most businesses would benefit from adhering to a more disciplined, progressive cycle of: looking at the data > generating hypotheses > running tests > examining the data from that test > generating new/more informed hypotheses from that test data > running a new/more informed test, etc.

In any case, this canvas is a great addition to the organized tester’s toolset! Thanks!

Hello Momoko,

You are so right!

This is only the first step of a systematic cycle of experimentation. You describe an ideal process. Some companies might follow it loosely, but I have yet to see it practiced rigorously in an organisation.

Not even in the “famous” conversion machine-type companies.

This is my next big challenge: getting organizations to adopt the rigorous version of business experimentation.

Thanks again for the nice words!

Hey Arjan, thanks for your post. Very insightful. Was looking at PersuasionAPI and was wondering whether a site should be using different persuasion tactics for different users?

Hey Ben, good question!

PersuasionAPI (machine learning technology) determined per individual user which persuasion tactic was most effective. This was based on the fact (in this case, scientific research by Maurits Kaptein & Dean Eckles) that different users react differently to various persuasion tactics.

So, yes, I would advise running tests to see whether it makes sense in your context to personalize persuasion tactics.

Loving this clearly described systematic approach. It is sometimes difficult to find solid content on processes rather than just tactics related to subjects like growth hacking and lean. Definitely going to play with it right away by printing a few copies. Thanks a bunch Arjan!

Hi Martijn,

That’s great to hear! You are not the first that prints a few copies and gives it to colleagues. It was the main idea behind sharing it this way.

Would love to hear your experiences with the canvas!