You’re running lots of tests? Great stuff.

Now, here’s a piece of the puzzle you may not have thought about: what is the most appropriate way to archive test results?

Surely, any mature organization can use insight from past test results as an indicator of where to go in the future. Trouble is, there is no single correct way to do this, and barely anyone is talking about how to do it well.


Why Archive Old Test Results?

If optimization is, as Matt Gershoff put it, about “gathering information to inform decisions,” then part of that process is documenting what you’ve learned. This applies beyond test results, too: documentation matters for qualitative research, such as customer surveys, and for anything else that brings insight to your decision making.

Really, there are two main tangible benefits to archiving old test results. The first has to do with regular reporting and is more applicable to communication. As Manuel da Costa put it, “You have an audit trail of everything you have done for that client – so you can show them the value of your optimization efforts.”

The second is about knowledge management, supporting test ideas, and evolutionary learning.

You Need To See It To Believe It

A scenario you might be familiar with:

You’ve got the initial buy-in for a testing program (or you’ve just started working on a client’s site), and you’ve made some substantial lifts. You’re learning more and more about your customer base, and each test is bringing you more insight, which will lead to more revenue.

The problem: how do you communicate these results, clearly, to executives?

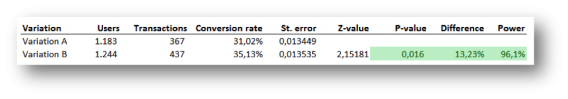

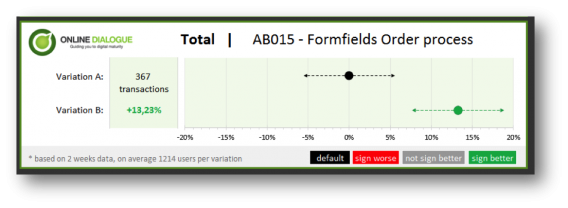

We recently published a great post by Annemarie Klaassen and Ton Wesseling that told their detailed journey of visualizing A/B tests results for clearer communication. You can (and should) read the whole post later, but for quick reference, here’s what they started with:

And where they ended up:

The clearer you can communicate ROI, the more organizational buy-in you can receive, leading to a stronger testing culture.

Joanna Lord gave a great speech at CTA Conference this year, where she talked about fostering a better culture around optimization. Her third point honed in on the need for reporting, because, as she said, “you need to see it to believe it”.

At Porch, she says, they hold weekly test roundups, and each report leads with insights (which rank above even revenue). As Joanna said:

Joanna Lord:

“If we’re going to plan for the future at all, we better have a lot of data that someone’s brought into the room, so that we’re all working from the same place, we all start from the same place.”

So their reporting accumulates, and even tests from a year ago can bring insights to current test ideas.

Knowledge Management and Evolutionary Learning

Now there’s the second side of reporting: using the archives as a database of accumulated knowledge.

As Manuel da Costa from Digital Tonic put it, “Ultimately, documenting also serves as your own testing library that you can dip in and out of when brainstorming in other projects. There is accountability and also helps maintain the trust with the clients you work with.”

Martijn Scheijbeler, Director of Marketing at The Next Web, echoed a similar sentiment, placing emphasis on the fact that all of the knowledge can be put in one place where everyone can benefit from it:

Martijn Scheijbeler:

“Having 1 place where all the data can be found by both the CRO team that is working on testing but also that we have data to the rest of the organisation to explain to them what the test entails, how we tracked it and what was the hypothesis on something. So it’s kind of a backup of history on what we’re doing but also could help us defend what we’re working on.

As it’s a planning tool as well we have all our ideas from the whole organisation in 1 place and that makes it easy for us to reschedule ideas if needed.”

How Mature Organizations Archive Test Results

There’s no one way to do it. While one organization may prefer Excel and Trello, another may have a built-in process complete with a custom tool to track all tests.

We mentioned Porch above. Its team spends Sundays documenting results, insights, and other pertinent information, all of which goes into a database of past test results. Though I’m not sure of the exact tools they use, it seems like a more manual process than at some other organizations.

That’s what’s interesting about archiving test results: there’s no single correct method. What matters is whatever makes your team most efficient.

The Next Web

The Next Web is a powerhouse in tech news, and their growth/optimization team is efficient. Here’s how Martijn Scheijbeler described their reporting and archiving process:

Martijn Scheijbeler:

“Obviously every test that we run is documented to make sure in 6 months we still know what test we ran, at what time, and what it was about. As we try to keep up the velocity and run approximately 200+ tests this year, it’s of great need to us.

To keep our testing documentation as structured and available as possible we’ve decided to build an internal tool for this. Both ideas and actual experiments are being added in by the team who is responsible for it. We can easily track the backlog with ideas and move them to a real experiment when the time comes.

We save quite some data on a test: its ID, name, Device category, Template, Experiment Status (Running, Finished, Building, etc.), Objective Metric, Owner, Expected Uplift, Start Date, Run Time, Implemented Date, Description, Hypothesis and the Tracking Plan. This data is then automatically extended with data on our current goals in combination with the objective metric – and we also know the end date based on the start date + run time.

In addition to the experiment data we save two extra fields per variant – if it was winning and a screenshot of that variant. Based on this we extend our information with the reporting data on our tests.”
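To make Martijn’s field list concrete, here is a minimal Python sketch of such a record. It is not The Next Web’s internal tool: the class names, the field types, storing run time in whole days, storing expected uplift as a fraction, and the screenshot path are all assumptions, and the sample test is hypothetical. It does show the one derived field he mentions, with the end date computed from start date plus run time rather than stored.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from enum import Enum
from typing import Optional


class Status(Enum):
    # Statuses named in the quote; his "etc." implies there are more.
    BUILDING = "Building"
    RUNNING = "Running"
    FINISHED = "Finished"


@dataclass
class Variant:
    name: str
    winning: bool          # was this variant a winner?
    screenshot_path: str   # hypothetical: path or URL of the stored screenshot


@dataclass
class Experiment:
    id: int
    name: str
    device_category: str
    template: str
    status: Status
    objective_metric: str
    owner: str
    expected_uplift: float  # assumption: a fraction, e.g. 0.05 means 5%
    start_date: date
    run_time_days: int      # assumption: run time recorded in whole days
    description: str = ""
    hypothesis: str = ""
    tracking_plan: str = ""
    implemented_date: Optional[date] = None
    variants: list[Variant] = field(default_factory=list)

    @property
    def end_date(self) -> date:
        # Derived, not stored: end date = start date + run time.
        return self.start_date + timedelta(days=self.run_time_days)


# Hypothetical example record.
exp = Experiment(
    id=1, name="Example headline test", device_category="mobile",
    template="article", status=Status.FINISHED, objective_metric="shares",
    owner="growth team", expected_uplift=0.05,
    start_date=date(2016, 3, 1), run_time_days=14,
)
print(exp.end_date)  # 2016-03-15
```

Deriving `end_date` instead of storing it means the two values can never disagree if someone edits the run time later.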

GrowthHackers.com

GrowthHackers.com recently outlined a growth study on how they began high tempo testing and how it revived their growth. This entailed 3 experiments a week, including new initiatives, product feature releases, and of course A/B tests.

What is high tempo testing? As Sean Ellis put it:

Sean Ellis:

“My inspiration for high tempo testing comes from American football and the ‘high tempo offense.’ Perhaps the best example of this is the Oregon Ducks’ college football team. From the moment they hit the field they are go, go, go… The opposing teams often end up on their heels and Oregon is able to find weaknesses in their defense. It’s exciting and frankly kind of exhausting to watch. High tempo testing is approached with a similar energy and urgency to quickly uncover growth opportunities to exploit.”

So as much as I hate the Ducks for beating Wisconsin in the Rose Bowl a few years ago, you can see how archiving results would benefit a team testing this way. At this volume and velocity, it’s important to fuel your tests with as much insight as possible so you don’t waste valuable time or traffic.

The above image is from GrowthHackers’ new tool, Canvas, which supports the whole process, including archiving. It stores not only results but also ideas, hypotheses, and more. That makes it easier for team members to extract insights from past tests, and it lets new members onboard quickly by reviewing what has and hasn’t been tested. Here’s how Sean put it:

Sean Ellis:

“All of the completed experiments are ultimately stored in a knowledge base so we ensure that we capture this learning and don’t keep repeating the same tests. The knowledge base is also very helpful when adding new members to the team, so they can understand what has been tested, what worked and what didn’t.

The analysis has started to become a bottleneck for us, so we recently added a role that is responsible for analyzing the completed tests and managing the knowledge base. Previously this responsibility was shared by the team.”

Digital Tonic

Here’s Manuel da Costa explaining how their reporting process has evolved:

Manuel da Costa:

“We basically documented everything – from ideas & observations to test plans and ultimately results and reports.

We used a patchwork of tools like Trello and Google docs and Basecamp to keep information on each project (client).

We would do a weekly standup with the client to show what’s been going on as well as presenting any test results via powerpoint, etc.

Compiling reports was a time consuming process mainly because we had to dig through so many sources.

So talking about the patchwork of tools we used – it was ok but not very efficient. It all came to a head when a client asked us to find all the experiments we had run for a certain criteria (let’s say for example purposes it was social proof).

We had run about 50+ experiments and finding that one piece of info was taking longer than expected. Actually, it took us 2 hours. 2 hours that could have been better spent brainstorming new ideas or setting up new tests.

We created Effective Experiments to tackle that problem.”

In short, to track everything and report it back efficiently, they built a tool that saves the time they had been spending on manual reporting across a patchwork of common tools.
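Manuel’s two-hour search is exactly the kind of question a structured archive should answer in seconds. The sketch below assumes each archived test carries free-form tags and a written hypothesis. `ArchivedTest`, `find_tests`, and the sample entries are hypothetical, and this is not how Effective Experiments works internally. It simply matches a criterion against the tags (case-insensitively) and against the hypothesis text.

```python
from dataclasses import dataclass, field


@dataclass
class ArchivedTest:
    name: str
    hypothesis: str
    tags: set[str] = field(default_factory=set)  # hypothetical tagging scheme


def find_tests(archive: list[ArchivedTest], criterion: str) -> list[ArchivedTest]:
    """Return tests tagged with `criterion` or mentioning it in the hypothesis."""
    needle = criterion.lower()
    return [
        t for t in archive
        if needle in {tag.lower() for tag in t.tags} or needle in t.hypothesis.lower()
    ]


archive = [
    ArchivedTest("Testimonials on pricing page",
                 "Adding social proof will raise sign-ups", {"social proof", "pricing"}),
    ArchivedTest("Shorter checkout form",
                 "Fewer fields will reduce abandonment", {"forms"}),
    ArchivedTest("Customer count in header",
                 "Showing usage numbers builds trust", {"Social Proof"}),
]

matches = find_tests(archive, "social proof")
print([t.name for t in matches])
# ['Testimonials on pricing page', 'Customer count in header']
```

Tags catch tests someone deliberately categorized; the substring check on the hypothesis is a fallback for tests nobody tagged.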

Data for Decks

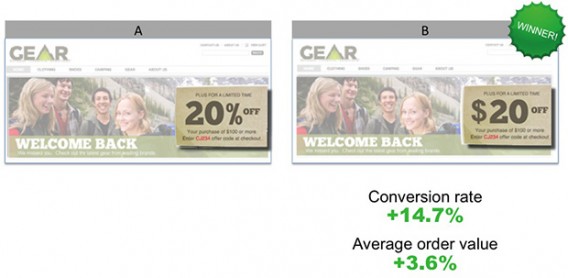

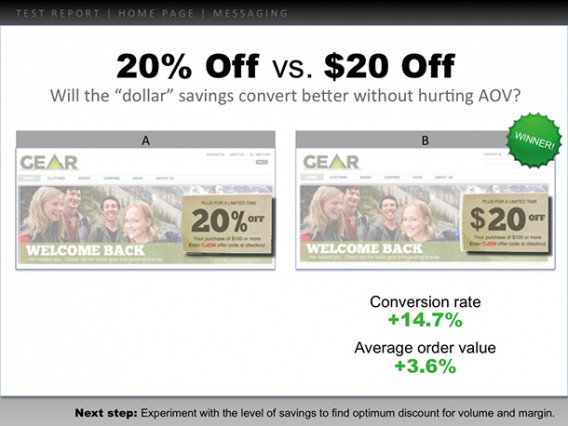

While this is more of a reporting solution for executive understanding, it’s also a solid way to archive test results for learning. Chris Tauber, chief analyst at Data for Decks, wrote a post on Monetate’s blog that outlined a simple five-step reporting process that uses PowerPoint to explain results:

1. Capture full screenshots of “A” and “B.”

2. Highlight what’s being tested.

3. Align the hypothesis to the metrics.

4. Show only the metrics that matter.

5. Put these pieces on one, and only one, slide.

And of course, you can save these slides as a high-level overview of test results, and possibly combine them with the other tools listed above (and more that I’ll list below).
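If you want to generate these slides consistently from your archive, the five steps map onto a small data structure. This is a hypothetical plain-text outline, not Data for Decks’ template and not a PowerPoint API. `TestResult`, `one_slide`, the screenshot paths, and the sample lift figures are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class TestResult:
    name: str
    change_tested: str         # step 2: what is being tested
    hypothesis: str
    screenshot_a: str          # step 1: hypothetical paths to full screenshots
    screenshot_b: str
    metrics: dict[str, float]  # every metric the tool reported, as relative lift


def one_slide(result: TestResult, metrics_that_matter: list[str]) -> str:
    """Render a plain-text outline of a single results slide."""
    lines = [
        f"Test: {result.name}",
        f"Screenshots: A={result.screenshot_a}  B={result.screenshot_b}",
        f"What changed: {result.change_tested}",
        f"Hypothesis: {result.hypothesis}",
    ]
    # Steps 3 and 4: keep only the metrics tied to the hypothesis.
    for m in metrics_that_matter:
        if m in result.metrics:
            lines.append(f"{m}: {result.metrics[m]:+.1%}")
    # Step 5: return one outline, i.e. one slide's worth of content.
    return "\n".join(lines)


r = TestResult(
    "CTA copy", "Button text", "Benefit-led copy increases clicks",
    "a.png", "b.png",
    {"CTA clicks": 0.042, "bounce rate": -0.01, "time on page": 0.003},
)
print(one_slide(r, ["CTA clicks"]))  # last line: CTA clicks: +4.2%
```

Forcing the caller to name `metrics_that_matter` up front is the point: the hypothesis decides which numbers appear, not whatever the testing tool happened to report.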

Tools To Help You Out

Canvas

As mentioned above, GrowthHackers built Canvas to help with the growth team’s project management. Here’s how Steven Pesavento, GrowthMaster at GrowthHackers, describes the tool:

Steven Pesavento:

“We built Canvas because we needed a tool to manage our growth process when spreadsheets weren’t enough.

Our core growth team uses the product every day to add new ideas to our growth backlog, manage the status of each growth experiment and for documenting our learnings from each test. I see it as our growth control center because each morning, as the GrowthMaster, I login into my dashboard to see the status of our tests and then dive deeper into each one that needs my attention.”

Iridion

Built by konversionsKRAFT to organize the entire testing process, Iridion is a sophisticated tool for archiving test results. Its site lists this benefit: “Record all of your test results in a constantly growing archive. Make sure that new team members immediately know what has been tested previously and how successful these tests were. Use these findings for follow-up tests.”

Here’s how Andre Morys describes the tool:

Andre Morys:

“Iridion is much more than just an insights database and workflow management. We will add our knowledge from thousands of a/b-tests to help growth hackers and optimizers building stronger experiments with higher uplifts. For example, Iridion will contain a library of 200+ psychological behavior patterns and will include qualitative methods to improve text concepts even before you test them.”

So Iridion is aimed at improving the quality of tests as well as the workflow. As Andre told me, “I don’t share the idea of ‘high speed testing’ – High impact and success rate is economically much more important than high frequency.”

Effective Experiments

As mentioned above, Manuel da Costa built Effective Experiments to help manage conversion optimization projects. Here’s how Manuel describes the tool:

Manuel da Costa:

“A single platform to manage the entire optimisation workflow that would help CROs to store their ideas, test plans and results all in one place. We even went further by creating a lot of automated features that will save CROs time – such as automated reporting and integrations with AB testing tools etc.”

So it’s an all-in-one workflow tool designed to make reporting and archiving much easier.

Trello & Excel

No one said archiving test results had to be fancy. In fact, Excel is probably (though I have no data to back this) the most common way organizations archive test results. Josh Baker wrote an in-depth post on how he documents A/B test results using Excel, along with exactly what he documents.
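A spreadsheet log needs no special tooling. As a minimal sketch, Python’s standard `csv` module can write a log that Excel opens directly. The column set below is a hypothetical example, not Josh Baker’s template, and the sample row is made up.

```python
import csv
import io

# Hypothetical column set for a spreadsheet-based test log.
COLUMNS = ["test_id", "name", "hypothesis", "start_date", "end_date",
           "winner", "lift", "notes"]


def write_log(rows: list[dict], fh) -> None:
    """Write a header row plus one row per test; missing keys become empty cells."""
    writer = csv.DictWriter(fh, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(rows)


def read_log(fh) -> list[dict]:
    """Read the log back as one dict per test (all values come back as strings)."""
    return list(csv.DictReader(fh))


buf = io.StringIO()  # stands in for a real file in this example
write_log([{
    "test_id": "T-001", "name": "Hero image",
    "hypothesis": "A product photo beats a stock photo",
    "start_date": "2016-01-04", "end_date": "2016-01-18",
    "winner": "B", "lift": "0.031", "notes": "Ran during January sale",
}], buf)
buf.seek(0)
rows = read_log(buf)
print(rows[0]["winner"])  # B
```

To write to disk instead, open the file with `open("test_log.csv", "w", newline="")`; the `csv` documentation recommends `newline=""` so rows don’t pick up extra blank lines on Windows. Since `DictReader` returns every value as a string, convert `lift` back to a number before doing any math on it.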

We use Trello for certain projects at CXL. It’s also possible to enact a combination of Trello, Excel, and say, the Data for Decks Powerpoint example above, which will give greater visual clarity to non-optimization team members and executives.

Your Testing Tool

There are many ways to integrate your documentation process with your testing tool. Here, Leonid Pekelis from Optimizely explains:

Leonid Pekelis:

“Optimizely does have an “archive” button for experiments, but what that does is remove the experiment’s code from a customer’s website. This stops the experiment, which means no new results will come through. Customers can un-archive anytime they want.

We have found differing needs and approaches to sharing and storing test results across organizations. We try to make it easy for customers to start their own approaches without imposing any one solution by providing how-to articles, and templates.

More generally, archiving test results lets an experimenter do three really important things: save knowledge gained from experiment results to motivate future tests and decision making, disseminate results across the organization to increase impact of tests, and finally spread testing culture. A good process for storing and sharing tests is definitely a keystone to a successful A/B strategy.”

There’s a whole discussion on Optiverse about archiving test results. Read it if you’re looking for ideas for your own organization.

VWO also offers ways to archive test results. Here’s Paras Chopra explaining:

Paras Chopra:

In VWO, we give a couple of options around knowledge management. Couple of things we enable in VWO:

- We have notes associated with each campaign, so before setting up the test you can record your hypothesis and after the test is done you can record your interpretation of results

- We have Gmail-type labels that you can apply to campaigns. So you can categorize your campaigns such as: CTA-tests, major-impact and whatever else you desire according to your company’s CRO process

- We have annotations in the chart, so you can annotate on a graph if you see any spikes in conversions/traffic and mark it for your other colleagues to see

- We also have a campaign and account timeline so you can see your entire history of which campaigns were started and who did what
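Gmail-style labels amount to a many-to-many index: one campaign can carry several labels, and each label points at several campaigns. The sketch below is a generic illustration of that idea in Python, not VWO’s API. The campaign names are hypothetical; the label names come from Paras’s examples.

```python
from collections import defaultdict

# Hypothetical campaigns, each with any number of labels.
campaigns = {
    "Homepage CTA color": {"CTA-tests"},
    "Checkout redesign": {"major-impact"},
    "Pricing CTA copy": {"CTA-tests", "major-impact"},
}


def by_label(campaigns: dict[str, set[str]]) -> dict[str, list[str]]:
    """Invert campaign -> labels into label -> sorted campaign names."""
    index = defaultdict(list)
    for name, labels in campaigns.items():
        for label in labels:
            index[label].append(name)
    return {label: sorted(names) for label, names in index.items()}


index = by_label(campaigns)
print(index["CTA-tests"])  # ['Homepage CTA color', 'Pricing CTA copy']
```

Because a campaign isn’t forced into a single folder, “Pricing CTA copy” shows up under both labels, which is what makes label-based browsing useful months later.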

Limitations of Learning From Past Tests

Archiving past results, and particularly managing and analyzing them, is time-consuming. With any time investment, you’d hope that the ROI would be positive. One of the main questions you’ll ask yourself when it comes to learning from past tests is, “how relevant are the learnings from last year’s tests?”

Martijn Scheijbeler says that, though there are some limitations to learning from past tests, in general the benefits outweigh them. Here’s what he had to say:

Martijn Scheijbeler:

“There are some limitations. But, in 6 months, the context of a certain test can be completely different if you were to re-test it anyway – as a dozen elements on the page could have been changed in the meantime. That means a re-test is never really a re-test, in our opinion. But it’s still something you’d like to know to make sure you don’t miss certain tests. We also hope that we can learn from these tests, particularly what areas usually have a higher result in testing than others, in order to know what works better for future tests.”

Manuel da Costa agreed, mentioning that learnings from past tests are valid, yet they have to be taken with a grain of salt due to external validity factors:

Manuel da Costa:

“Are those insights still valid?

Yes and no. Some insights tied to seasonal trends were valid whilst some no longer held their weight because of external changes in the marketplace.”

Seasonality, traffic sources, PR, and other external factors matter no matter what, though, and not only when you analyze past results. If you record these details on your reports, you can factor them into your analysis later.
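One lightweight way to act on this is to store a few context fields with every archived test and compare them with today’s conditions before reusing a learning. The fields chosen here (`season`, `main_traffic_source`, `pr_event`) and the helper `context_changes` are hypothetical; pick whichever external factors matter to your business.

```python
from dataclasses import dataclass, fields


@dataclass
class TestContext:
    season: str               # e.g. "holiday" or "summer"
    main_traffic_source: str  # e.g. "paid search"
    pr_event: bool            # was there press coverage while the test ran?


def context_changes(past: TestContext, now: TestContext) -> list[str]:
    """Return the names of context fields that differ between then and now."""
    return [f.name for f in fields(past)
            if getattr(past, f.name) != getattr(now, f.name)]


past = TestContext(season="holiday", main_traffic_source="paid search", pr_event=True)
now = TestContext(season="summer", main_traffic_source="paid search", pr_event=False)
print(context_changes(past, now))  # ['season', 'pr_event']
```

A non-empty result doesn’t invalidate the old test. It tells you which differences to weigh before treating it as evidence, in the spirit of Manuel’s “yes and no.”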

Steven Pesavento doesn’t see these things as ‘limitations,’ necessarily. Even though a channel or tactic may change, learning from past tests is a necessity for the GrowthHackers team:

Steven Pesavento:

“Limitations are really based on how a channel changes or whether certain tactics are still relevant. These changes could happen over the course of weeks, months or even years.

At GrowthHackers, we regularly review our knowledge base searching for opportunities to retest old ideas with new strategies. I don’t see it as a limitation as much as just a part of the testing and learning process. Much like the growth process, where we continue to test new channels as they become available, we have to continue learning from our past tests.”

Conclusion

Archiving test results is important because it allows for clearer reporting and communication, and because it gives you a knowledge database from which you can extract insight.

However, unlike A/B testing statistics, the rules of execution are bendable when it comes to archiving results. There is no one way to do it, and most mature organizations do it a little differently. As long as you’re tracking the right data (the data pertinent to your growth), the method by which you do so is of secondary importance.

Some have developed sophisticated in-house tools to solve the problem, some use their testing tool, some purchase external tools, and some are still using good ol’ Excel. In the end, it’s up to you and what works best for your team.

Since this article is more of a discussion than a how-to, I want to ask: how does your team document and archive test results? What kind of struggles and bottlenecks do you face in the process?