What do you do when you have a low-traffic site? Can you still run A/B tests? Well, it depends on what you count as a low-traffic site. You need to have some volume, of course. And there’s higher risk involved without the surplus of data.

But sometimes you can still test.

While it’s easier to test when you have a million visitors a month and a thousand sales a day, that’s a luxury not a lot of small businesses and startups experience. But of course, we all want to optimize our sites and make data-driven decisions.

So how is it possible?

First off, if your traffic is too low to test, you don’t need to run tests to optimize your site. You can do many other things such as:

- Heuristic analysis

- Remote user testing

- Customer interviews and focus groups

- Five Second Tests

You can also invest in traffic acquisition (SEO and CRO work well together). If you do all these things, you can certainly make valid gains.

But if you have a certain level of traffic (this level is different depending on a few factors) you can run A/B tests too – though that’s at the top of the low-traffic optimization pyramid (meaning you have to cover the bases first).

You just need to get really big wins, which means you probably won’t be testing button colors (or shouldn’t be at least).

Even if you’re not Amazon, if you do some conversion research and test big things, you can still take part in optimization.

Meet Bob & Lush:

Bob & Lush is a premium dog food supplier in the UK, with an e-commerce site active in several countries in Europe. They pride themselves on using 100% fresh meat and real vegetables. They sell the highest quality dog food without any fillers, sweeteners or artificial preservatives for dog owners who truly care about their dogs.

After conducting conversion research (using the ResearchXL framework) and running several treatments on their site, we achieved a stable conversion rate uplift. Below we’ll introduce a treatment run on their shopping cart page that resulted in a conversion rate uplift of 13.5%.

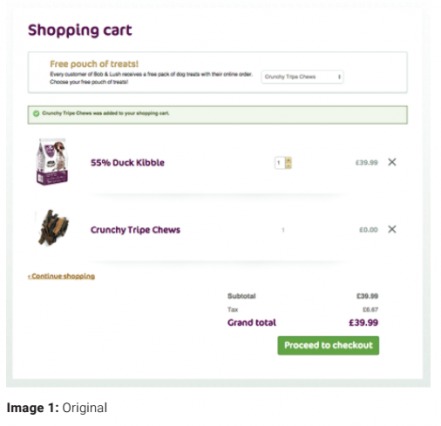

First, here’s the initial shopping cart page:

Upfront, I’ll let you know we tied three things into our treatment:

- Social proof

- Benefits-driven copy

- Payment information trust and clarity

We arrived at these solutions by means of multiple forms of conversion research as well as heuristic analysis.

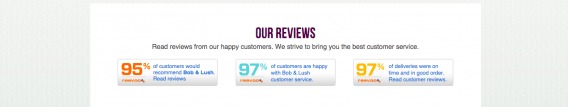

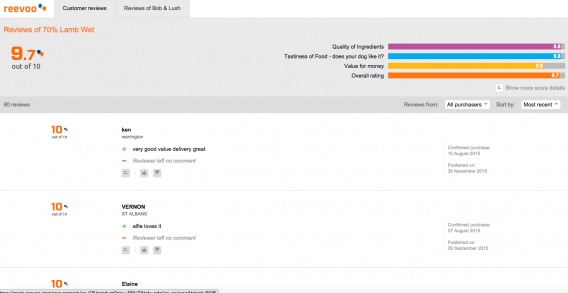

If you head over to the Bob & Lush homepage, you’ll notice some prominent social proof in the form of customer reviews:

This was a test we’d run previously on their site, and it won big. We used Reevoo, which is not only a big name and well trusted in the UK, but allows for live reviews (instead of static ones). Generally, these reviews are perceived as more trustworthy since anyone can simply submit a review (as opposed to having the company self-select flowery reviews).

Since this worked so well on the homepage, we decided to test it further down the funnel and add social proof to the checkout as well. Reevoo also opens the reviews in a different tab, so it doesn’t interrupt the checkout flow:

Because Bob & Lush is a premium brand, they aren’t really competing on price. Therefore, we needed to make the benefits of purchasing with them very clear.

Through user testing and our customer surveys, we were able to uncover some of the most common reasons people love purchasing from Bob & Lush as well as the common doubts and objections they have.

First, we took some of the exact language people used and worked it into the copy. People said they loved the company because it was “100% natural, no rubbish,” so we injected that voice-of-customer (VoC) language right into the copy.

Second, 4 out of 4 user testers were frustrated at the lack of clear shipping and delivery information. They didn’t know how much it cost or when it would arrive. So we added some prominent information that featured one of the site’s biggest value offers, “free next day delivery.”

All user testers were impressed with the pouch of free treats. It was a positive surprise for most of them.

Previously, the site’s CTA was a simple proceed to checkout. Through heuristic analysis, though, we added a few ‘best practices’ that boosted trust and clarity in the checkout process.

For one, we added icons of all the types of payment the site accepts. This removes the doubt of, “do they accept AMEX?”

Next, we added a Sage Pay icon. Sage Pay is a highly recognizable icon in the UK – actually it’s the biggest independent payment service provider in Europe – and therefore it embodies trust.

So we tied together the three solutions we uncovered into one treatment with the following hypothesis:

Adding benefits, social proof, and payment methods to the shopping cart page will improve the perceived value of the products and lead to more purchases. Social proof assures people that the dog food is actually good, benefits remind people of all the extras they will be getting, and Sage Pay plus credit card info improves trust/clarity and draws more attention to the “Proceed to checkout” CTA.

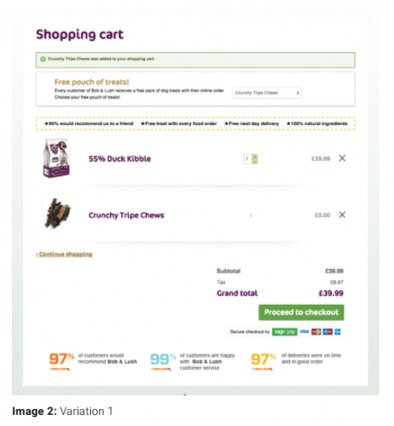

Well, the article headline gave away the results, but check out the variation:

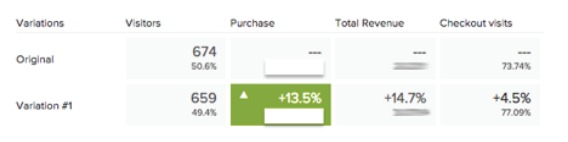

And here’s the test result:

After multiple business cycles and more than 1,300 visitors tested, we concluded that Variation #1 converts 13.5% better than the original page. The other goals moved in the same positive direction. The revenue improvement reached 79% statistical significance, with an AOV uplift of 15.3%, from 25.83 pounds to 29.79 pounds.
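For readers who want to check the arithmetic, here is a minimal sketch of how a relative uplift figure like the AOV one is computed. The function name `relative_uplift` is our own, used only for illustration; the two input values are the AOV figures quoted above.

```python
def relative_uplift(control: float, variation: float) -> float:
    """Return the relative change of the variation over the control, as a fraction."""
    if control == 0:
        raise ValueError("control value must be non-zero")
    return (variation - control) / control


# AOV figures quoted in the test result, in pounds.
aov_uplift = relative_uplift(25.83, 29.79)
print(f"AOV uplift: {aov_uplift:.1%}")  # AOV uplift: 15.3%
```

The same calculation applies to conversion rates: divide the difference between variation and control by the control's rate.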

Since the revenue improvement is only at 79% significance, I must mention that there’s a chance there is no real difference in revenue, but it’s almost certain the revenue isn’t going to decrease. Because we had a statistically valid result in conversion rate improvement and revenue is trending in a positive direction, it’s worth taking the risk at this level of traffic. At best, we get a significant revenue improvement; at worst, there’s no real difference in revenue.
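If you want a rough feel for where a significance figure on conversion rate comes from, the sketch below uses a classical pooled two-proportion z-test. This is an assumption on our part: testing tools compute significance in different ways, and this is not necessarily how the figures in this case study were produced. The function names and the visitor and conversion counts in the usage lines are hypothetical, not data from the Bob & Lush test.

```python
import math


def normal_cdf(z: float) -> float:
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def two_proportion_confidence(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided confidence (1 - p-value) that two conversion rates differ.

    Uses a pooled two-proportion z-test, a classical fixed-sample method.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 0.0  # no variation in the data, so nothing to distinguish
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - normal_cdf(abs(z)))
    return 1 - p_value


# Hypothetical counts for illustration only (not the case study's data).
confidence = two_proportion_confidence(conv_a=90, n_a=650, conv_b=120, n_b=650)
print(f"Confidence that the rates differ: {confidence:.0%}")
```

A result below your chosen threshold (commonly 95%) means the observed difference could plausibly be noise, which is the situation described above for revenue.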

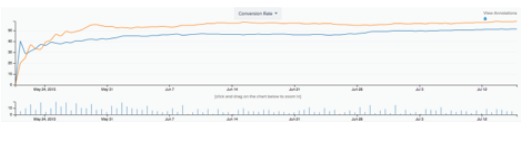

Looking at the conversion rate throughout the testing period, we can see a stable uplift, which also reassures us of the treatment’s validity:

While we’re confident in our results here, when you’re doing testing on low traffic sites there are some specific concerns you need to keep in mind.

One of them, something I covered briefly, is achieving valid results with a small sample size. You can calculate the sample size you need with an A/B test calculator. The problem is solved mostly by testing things that are likely to produce larger effect sizes.

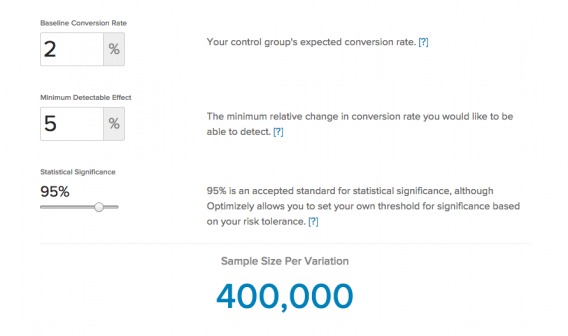

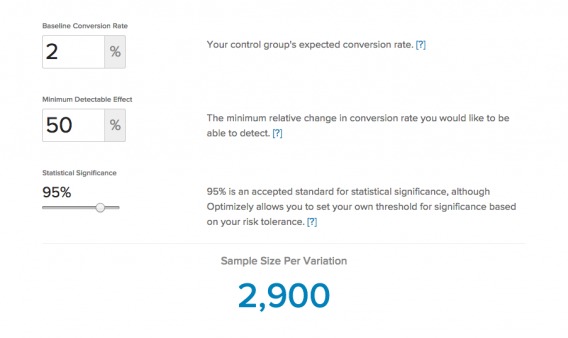

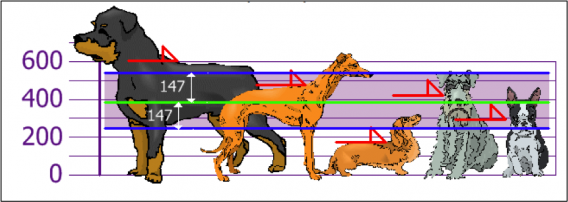

Here’s an illustration with Optimizely’s sample size calculator:

You can see that for a site with a 2% baseline conversion rate, you’d need 800,000 unique visitors to see a 5% minimum detectable effect. However, when you bump that up to 50%, you need significantly fewer visitors:
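To see why the effect size matters so much, here is a minimal sketch using the classical fixed-sample formula for comparing two proportions. This is an assumption on our part: Optimizely's calculator is based on its own statistical approach, so the numbers below will not match its output exactly. The function name, the 5% significance level, and the 80% power are illustrative defaults.

```python
import math
from statistics import NormalDist


def visitors_per_variation(
    baseline: float,
    relative_mde: float,
    alpha: float = 0.05,
    power: float = 0.80,
) -> int:
    """Approximate visitors needed per variation for a two-sided test.

    baseline     -- control conversion rate, e.g. 0.02 for 2%
    relative_mde -- minimum detectable effect relative to baseline, e.g. 0.05 for 5%
    """
    p1 = baseline
    p2 = baseline * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


small_effect = visitors_per_variation(baseline=0.02, relative_mde=0.05)
large_effect = visitors_per_variation(baseline=0.02, relative_mde=0.50)
print(f"5% MDE:  {small_effect:,} visitors per variation")
print(f"50% MDE: {large_effect:,} visitors per variation")
```

Because the required sample grows with the inverse square of the difference you want to detect, a tenfold larger effect needs on the order of a hundred times fewer visitors.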

Another thing to worry about is sample pollution. If you run a test for too long, people will delete their cookies, and if they revisit your site, they will be randomly assigned to a test bucket again. It happens even in shorter tests, but it becomes a problem when you run a test for too long (read our article on sample pollution to learn more).

The first tier in our optimization pyramid is accurately tracking everything on your site. When it comes time to pick things to test, you’ll want to have an accurate source of data to feed you insights.

So start out by implementing an analytics package (and doing an analytics health check). Begin a mouse tracking campaign. Look into getting some user testing data. Organize your data in a way that is actionable when it comes to prioritizing test ideas.

With low samples comes the increased ability for outliers to ruin your day.

Especially during the holidays, or whenever other external factors may influence purchasing behavior, it’s important to track and adjust outliers in your data. Kevin Hillstrom from Mine That Data suggests taking the top 5% or top 1% (depending on the business) of orders and changing the value (e.g. $29,000 to $800). As he says, “you are allowed to adjust outliers.”
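One way to adjust outliers along these lines is to cap the largest orders before comparing revenue between variations. The sketch below is our own illustration, not Hillstrom's exact procedure: it assumes you cap the top fraction of orders at the highest value just below that group, and the function name and sample order values are hypothetical.

```python
import math


def cap_outliers(order_values: list[float], top_fraction: float = 0.01) -> list[float]:
    """Cap the largest `top_fraction` of orders at the highest value below that group.

    The original order of the list is preserved.
    """
    if not 0 < top_fraction < 1:
        raise ValueError("top_fraction must be between 0 and 1")
    if not order_values:
        return []
    ranked = sorted(order_values)
    n_capped = math.ceil(len(ranked) * top_fraction)
    cap_index = len(ranked) - n_capped - 1
    if cap_index < 0:
        return list(order_values)  # too few orders to define a cap
    cap = ranked[cap_index]
    return [min(value, cap) for value in order_values]


# Hypothetical order values; one bulk order dwarfs the rest.
orders = [25, 30, 28, 35, 27, 31, 29, 26, 33, 29000]
print(cap_outliers(orders, top_fraction=0.10))
# [25, 30, 28, 35, 27, 31, 29, 26, 33, 35]
```

Whatever rule you choose, apply the same cap to both the control and the variation so the comparison stays fair.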

Finally, as I showed in our case study, you can’t test minuscule changes. We had to wrap together 3 different hypotheses, and we saw results.

There’s palpable disillusionment when a startup founder reads a case study about a fellow startup getting a conversion lift of 200%+ (which was most likely a false positive), and then later fails to even reach a significant result. Well, it turns out many of them are testing tiny changes.

Long story short, if you have low traffic, you have to test big stuff.

It’s an A/B testing best practice to change only one element per test. This way, you can learn which elements actually matter and attribute uplifts to specific treatments. If you bundle multiple changes together, you can’t accurately pinpoint which element caused how much improvement.

However, testing one element at a time is a luxury for high-traffic websites. The smaller the change, the smaller the impact typically is. For a website making millions of dollars per month, a 1% relative improvement might be worth a lot, but for a small site it’s not. So small sites need to swing for bigger improvements.

The question you should ask is “does this change fundamentally change user behavior?” Small changes do not.

The other factor here is that with a bigger impact, e.g. +50%, you need a smaller sample. Low-traffic websites will probably not have a large enough sample to detect small improvements, say 5%.

So the question is, if we can increase sales through bundling tests, is it worth losing the learning factor? Yes. After all, the goal of optimization is to make more money, not to make science.

Optimizing a low traffic site isn’t easy, but it’s possible.

In the case of Bob & Lush, we had been working on their site for a while beforehand, so we had accumulated a lot of knowledge about what works and what doesn’t with their customer base. One thing that had brought strong results was social proof, so we thought implementing it throughout the checkout process would be beneficial.

Conversion research then suggested that clear product benefits and clear/trustworthy payment icons would be a positive influence.

All of this combined led us to a statistically valid uplift.
