Having a well-thought-out plan for A/B testing Facebook ad campaigns is essential if you want to improve your performance reliably and consistently.

And the more you test, the better. A study of 37,259 Facebook ads found that “most companies only have one ad, but the best had hundreds.”

A/B testing Facebook ad campaigns can get complicated quickly (and easily produce invalid results). Spending the time upfront to perfect your testing process and structure will go a long way.

The structure of a Facebook ad campaign

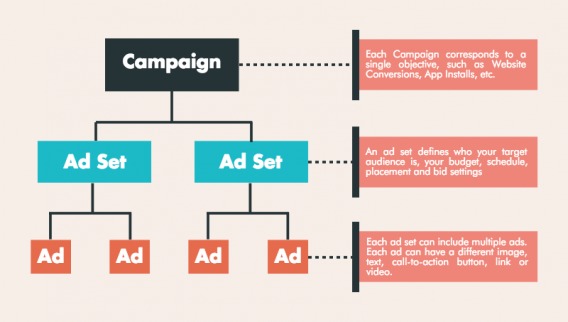

To develop a solid testing plan, it’s important to understand the structure of a Facebook ad campaign. The image above shows the three ‘levels’ of a campaign and describes the purpose of each one.

1. Campaign

The campaign is the overarching container that defines the objective of your Facebook ads.

For example, if you want to bring people from Facebook to your website and convert them to subscribers or customers, you would choose the objective ‘Website Conversions.’

For the purpose of A/B testing, my preference is to limit the scope of testing to a single campaign. Running A/B testing across multiple campaigns isn’t a necessity and makes it more difficult to analyze the data.

2. Ad set

An ad set defines a particular target audience. It is also used to define your budget, schedule, and bid type.

A campaign can contain multiple ad sets, and each ad set can target a different audience or the same one. Most campaigns will contain multiple ad sets targeting multiple audiences.

3. Ad

The ad is the creative that people see on Facebook or Instagram. It’s possible for each ad set to contain multiple ads.

There are several components to a Facebook ad: the image or video, the headline, the body text, and a link description.

Creating an organized testing process

To optimize your campaigns effectively, you will need to test multiple ad sets (target audiences) and multiple ads for each ad set. This can get very messy very quickly without a plan and without understanding a few best practices.

There is no 100% correct way to do this—everyone has a different method. This is my preferred method based on data and my own past experience.

Your testing options are exponential

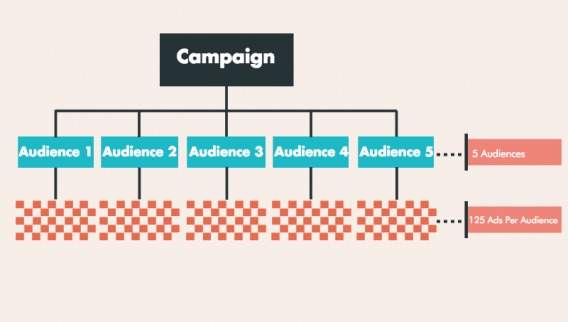

First, let’s look at the numbers. If you break down all of the different possible combinations for testing, the number of possible tests is quite daunting.

For example, let’s say you have five different target audiences to test running ads against.

And for your ad creatives, you have: five different ad images, five different ad headlines, and five different blocks of ad text.

That gives you 5 x 5 x 5 = 125 different possible ad creatives to test across your five target audiences, which means you have a total of 625 possible combinations to test.

That’s not to mention if you split your target audiences up by demographic details such as gender, age range, and location.

Taking the same example and splitting our five target audiences up into…

- Male and female;

- Ages 18-25 and ages 26-32;

- Australian and American.

…takes the total possible combinations to 5,000 (40 ad sets x 125 ads).
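To check the arithmetic, here is a minimal Python sketch that enumerates the combinations. The audience, image, headline, and text labels are placeholders made up for illustration; only the counts come from the example.

```python
from itertools import product

# Placeholder labels; only the counts (five of each) come from the example.
audiences = [f"audience_{i}" for i in range(1, 6)]
images = [f"image_{i}" for i in range(1, 6)]
headlines = [f"headline_{i}" for i in range(1, 6)]
texts = [f"text_{i}" for i in range(1, 6)]

# Every image/headline/text combination is one possible ad creative.
creatives = list(product(images, headlines, texts))

# Splitting each audience by gender, age range, and country multiplies the ad sets.
genders = ["male", "female"]
age_ranges = ["18-25", "26-32"]
countries = ["Australia", "United States"]
ad_sets = list(product(audiences, genders, age_ranges, countries))

print(len(creatives))                   # 125
print(len(creatives) * len(audiences))  # 625
print(len(ad_sets))                     # 40
print(len(ad_sets) * len(creatives))    # 5000
```

Each extra dimension multiplies the total, which is why the number of possible tests grows so quickly.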

Start broad, narrow down based on data

To make the process manageable, you need to simplify things and limit the number of A/B tests you choose to run at any given time.

You can do this by starting with a broad audience and a narrow selection of ad creatives. You then use the wealth of data that Facebook provides in its ad reporting to further refine your A/B tests over time.

Facebook actually provides a breakdown of your ad performance based on location, age, gender, ad placement, and more. You can use this data to simplify the testing process.

Here is how this looks when applied in a practical situation.

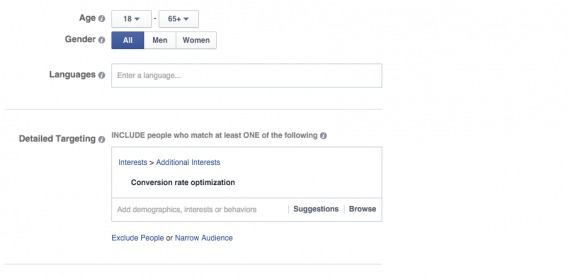

You would create a new ad set and target an audience based on a particular ‘interest’ on Facebook. Rather than only selecting a single gender or a narrow age bracket, you would leave these set to “All” and “18 to 65+.”

Because the Facebook reporting tool will tell you which gender and age bracket converted best for this ad set, you can analyze that data and exclude the underperforming demographics in your next test.
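As a rough illustration of that analysis, the sketch below ranks demographic segments by cost per conversion. The rows and field names are hypothetical stand-ins for an exported age and gender breakdown, and every figure is invented.

```python
# Hypothetical rows from an age/gender breakdown export; all figures are invented.
rows = [
    {"gender": "female", "age": "25-34", "spend": 40.0, "conversions": 10},
    {"gender": "female", "age": "35-44", "spend": 30.0, "conversions": 5},
    {"gender": "male", "age": "25-34", "spend": 45.0, "conversions": 3},
    {"gender": "male", "age": "35-44", "spend": 25.0, "conversions": 0},
]


def cost_per_conversion(row):
    """Return spend divided by conversions, or infinity when nothing converted."""
    if row["conversions"] == 0:
        return float("inf")
    return row["spend"] / row["conversions"]


# Cheapest segments first; the most expensive ones are candidates to exclude.
ranked = sorted(rows, key=cost_per_conversion)
for row in ranked:
    print(row["gender"], row["age"], cost_per_conversion(row))
```

Segments with only a handful of conversions can look better or worse than they really are, so it is worth waiting for enough data before excluding anything.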

Molly Pittman, Digital Marketer:

“In terms of age (and gender), a big misconception a lot of people have is that they MUST define their audience by these parameters.

Most of the time, if you do this, you’ll actually hurt your campaigns by making the assumption of the age/gender of your audience.

For example, our sister company Survival Life thought their target market was older, white males, and that’s who they were targeting on Facebook. But one time, on accident, they forgot to select males as the target (so they were targeting both men and women).

Do you know what they found?

Their best converting ads in that campaign were from women—the more you know…

That’s why, whenever we set up ad campaigns in new markets, we leave the Age and Gender options totally open and…

….let the data and the results tell us the age and the gender of the people who are actually going to convert.” (via Digital Marketer)

Prioritizing your A/B tests

Now let’s talk about what to test first.

AdEspresso studied data from millions of dollars spent on Facebook ad A/B tests and listed the elements that provided the biggest gains.

Here are the four things they found mattered most when it came to ad targeting:

- Country;

- Gender;

- Interests;

- Age.

As you can see, interest targeting came in third, behind country and gender. But because it has such a big impact on the success of a campaign, interest targeting is the first thing I focus on when A/B testing.

As mentioned above, gender and age are best left set to ‘all’ so we can use the report data to determine which performs best later.

How to A/B test interests

When testing ad targeting options, you should only select one interest or behavior per ad set unless you are using the “Exclude People” or “Narrow Audience” options.

Why?

In the image above, you’ll notice above “detailed targeting,” it says: “INCLUDE people who match at least ONE of the following.” That means if you select multiple interests or behaviors, a person only has to match one of them to be targeted by your ad set.

However, the Facebook ad reports can’t be broken down by interest or behavior. That means if you include more than one interest or behavior in your ad set, you will be unable to measure their performance individually.

One of your selections may be performing really well, while the other performs poorly. You’ll see a mediocre ad set, but will never know that you had actually found a high performing interest or behavior.

Use separate ad sets

To test different interests or behaviors, I recommend using a separate ad set for each one. This allows you to see exactly which one is performing best with the ad creatives you are using.

Mike Murphy, business coach at Brave Influence, had this to say on the topic:

Mike Murphy, Brave Influence:

“Many folks will take their research from step one, gather their interests, and then lump them all into one big list on the Facebook Ads Manager in hopes of reaching a large target audience. This is a grave mistake that will cost you far more in ad spend. And while you might get results, you’ll have no idea which interest brought the best results.

The solution: Include one interest per ad set so you can look out for the interests that bring the best results at the lowest cost.” (via Entrepreneur)

How to A/B test ad creatives

We’ve covered audience targeting. Now let’s take a look at how you can test your ad creatives.

Before we move on, it’s important to note the way Facebook treats multiple ads in a single ad set because it affects the way we conduct A/B testing.

Having multiple ads in an ad set means that Facebook will automatically A/B test those ads and determine a winner using its own algorithm. Once Facebook determines a winner, it automatically scales back the number of impressions delivered to the losing ads.

However, this can be problematic because a winner will often be automatically determined based on a very small sample size…

“One of the biggest challenges with split testing Facebook ads is that Facebook determines very early on in your advertising which of your ads is performing the best.

This usually isn’t an issue, however it can make testing multiple ads at the same time somewhat ineffective; this is because Facebook will often give the lion’s share of impressions to your best performing ad (at least in terms of clicks), and stop showing your lower-performing ads.” (via Kim Garst’s Blog)

Example: Facebook calls it… Incorrectly

In the example below, you can see just how early Facebook makes a decision on which ad is performing best.

After only showing the ad to 722 people, Facebook had already scaled back impressions for the second ad variation, only showing it to 334 people (compared to 680 people for the first ad variation). You’ll also notice the disparity in budget allocation between the different ads, with Facebook spending $7.27 on one ad and only $1.58 on the other.

But what’s most interesting is the cost per website click (the goal of this campaign). The ad that was deemed the loser by Facebook was actually delivering cheaper results.

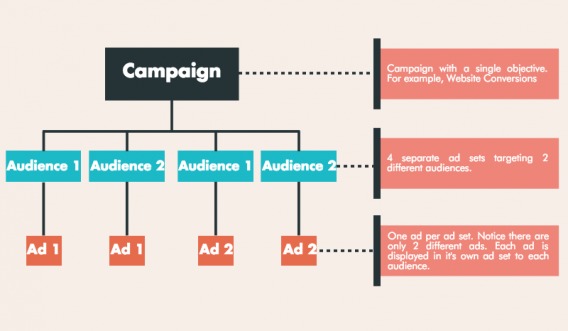

In order to get enough eyeballs on each of your ads and collect enough data to allow you to make intelligent decisions about your A/B tests, I recommend separating them into different ad sets.

Use separate ad sets (…again)

Instead of having a single ad set for a single target audience, I suggest having two separate ad sets targeting the same audience with a single, different ad in each. You can then let each ad run until you are satisfied with the amount of data available to you.

Once you make a decision on which ad is the winner, you can switch the underperforming ad set off.

Targeting the same audience with multiple ad sets can increase your costs due to the ad sets competing against each other in the bidding process, but this is minimal. It is also temporary because you will eventually turn off the ad sets that aren’t top performers.

Here is an example of what your campaign structure might look like with one ad per ad set:
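With one ad per ad set, the layout can be written out as plain data. This is only a sketch: the interest and ad names are hypothetical, and it does not call Facebook’s API; it just makes the structure explicit.

```python
from itertools import product

# Hypothetical interests and ads, for illustration only.
interests = ["Yoga", "Running"]
ads = ["image_a", "image_b", "image_c"]

campaign = {
    "name": "Website Conversions",
    "ad_sets": [
        {
            # One interest and exactly one ad per ad set.
            "name": f"{interest} | {ad}",
            "interest": interest,
            "ads": [ad],
        }
        for interest, ad in product(interests, ads)
    ],
}

for ad_set in campaign["ad_sets"]:
    print(ad_set["name"])
```

Putting the interest and the ad in each ad set name is one possible convention; it makes exported report data easier to filter and compare.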

What exactly to test

If we look back at the study from AdEspresso, it showed that when it comes to your ad creatives, these elements provided the biggest gains:

- Image;

- Post text;

- Placement (e.g., News Feed, right column, mobile);

- Landing page;

- Headline.

Based on that data, my preference is to focus on testing at least three different images and then one or two different sets of ad text.

This reduces the number of individual ads that are being A/B tested across different audiences at any one time, which simplifies the process. Once a winning audience and image combination is found, results can be incrementally improved by making changes to the elements that provide smaller gains, such as the post text and headline.

Obviously, the number of ad creatives you test depends on your overall budget and your capacity to create and analyze A/B tests. Larger budgets and more resources make it possible to test many more ad creatives at once.

How much does it cost to advertise on Facebook?

One of the benefits of Facebook ads is that you can start with a budget of as little as $1/day.

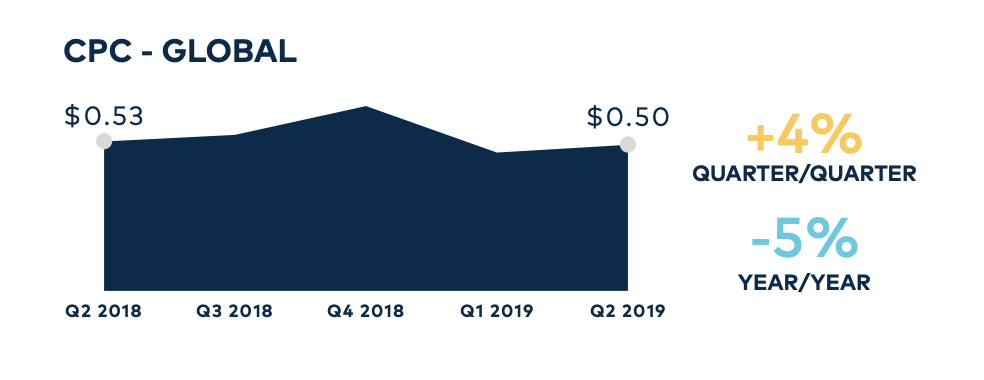

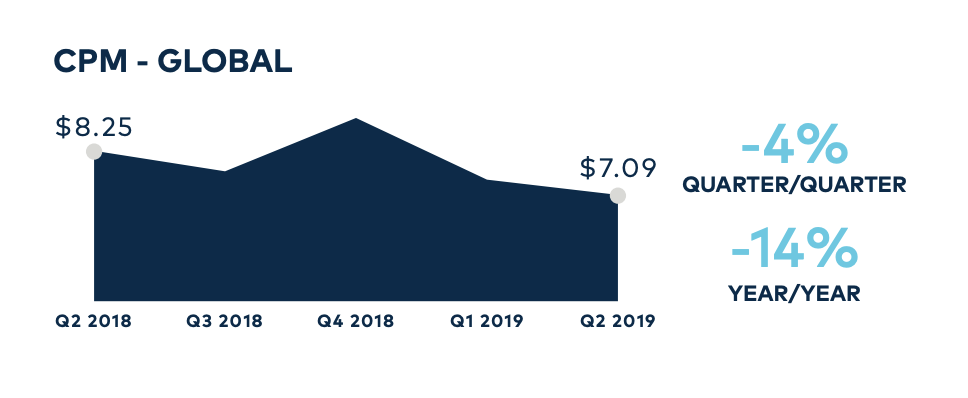

Costs are extremely conditional, but to give you a general idea of what you can get for your ad spend, let’s take a look at some stats from Nanigans’ Q2 2019 Global Facebook Advertising Benchmark Report.

- For Q2 2019, the global average cost per click (CPC) was $0.50.

- For the same quarter, the global average cost per 1,000 impressions (CPM) was $7.09.

Choosing the right metric

I recommend always focusing on the metric that’s most important to you instead of focusing on ‘fringe metrics’ like CPM. I call it a fringe metric because it feeds into primary metrics like cost per click (CPC).

If your goal is website clicks, focus on CPC instead of CPM.

If your goal is on-site conversions, focus on revenue instead of CPC or CPM.

Choosing one primary metric gives you a single measure of performance to use as a basis for your A/B testing.
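To see why CPM on its own can mislead, recall that cost per click is CPM divided by the number of clicks per 1,000 impressions. The sketch below uses two invented ads; the CPM and click-through rate (CTR) values are assumptions chosen purely for illustration.

```python
def cpc_from_cpm(cpm, ctr):
    """Cost per click implied by a CPM (cost per 1,000 impressions) and a click-through rate."""
    return cpm / (1000 * ctr)


# Invented figures: ad B has the higher CPM but also the higher click-through rate.
cpc_a = cpc_from_cpm(cpm=8.0, ctr=0.01)
cpc_b = cpc_from_cpm(cpm=10.0, ctr=0.02)

print(round(cpc_a, 2))  # 0.8
print(round(cpc_b, 2))  # 0.5
```

Judged by CPM, ad A looks cheaper, but ad B delivers clicks at a lower cost, and clicks are the goal in this scenario.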

When to pick a “winner”

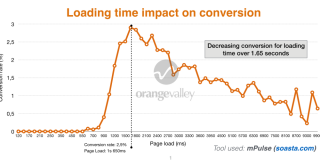

As an absolute minimum, you should let your ads run for at least 24 hours before making any judgements about their performance. This gives Facebook’s algorithm enough time to optimize properly.

This advice comes directly from Facebook:

It takes our ad delivery system 24 hours to adjust the performance level for your ad. It can take longer when you edit your ad frequently. To fix it, let your ad run for at least 24 hours before you edit it again.

That’s not to say you should judge the performance of all of your ads after 24 hours.

This is the absolute minimum required to allow Facebook’s algorithm to optimize. Because the algorithm only becomes optimized after 24 hours, I like to let my ads run for at least 48 to 72 hours.

You will also need to ensure that you have enough data for your results to be statistically significant and valid. You can use this great calculator to help determine when your test achieves significance.
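If you want to sanity-check a result yourself, one common approach is a two-proportion z-test. The sketch below is a minimal version using only the standard library; it is not necessarily the method any particular calculator uses, and the conversion counts are invented.

```python
from math import erf, sqrt


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates (pooled z-test)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (p_a - p_b) / std_err
    # Standard normal CDF via the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return 2 * (1 - cdf)


# Invented numbers: conversions out of clicks for two ads.
p = two_proportion_p_value(conv_a=30, n_a=1000, conv_b=50, n_b=1000)
print(p < 0.05)  # True
```

A p-value below 0.05 is a conventional threshold, not a rule; the approximation is also unreliable when either ad has very few conversions.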

The amount of time it takes to collect enough data varies. It’s possible to deliver tens of thousands of impressions and get hundreds of results in a matter of days, but that requires a relatively large budget. (Based on the average CPM figure we saw earlier, 20,000 impressions would cost about $142.)
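The impression cost follows directly from the definition of CPM. Here is a small sketch of that calculation using the $7.09 average CPM quoted earlier; the $20 daily budget is a hypothetical figure added to show how budget translates into time.

```python
def impression_cost(impressions, cpm):
    """Spend needed to buy a number of impressions at a given CPM (cost per 1,000)."""
    return impressions / 1000 * cpm


cost = impression_cost(20_000, 7.09)
print(round(cost, 2))  # 141.8

# Hypothetical daily budget, to estimate how long those impressions would take.
daily_budget = 20.0
print(round(cost / daily_budget, 1))  # 7.1
```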

A smaller budget means fewer impressions and results over the same period of time, so it takes longer to get a statistically significant result. Be sure you also account for the risk of sample pollution.

Whatever your budget, always test variations to significance before determining the winner and moving on to the next test.

Conclusion

My recommended approach for both audience testing and ad creative testing is to A/B test the elements that stand to provide the biggest gains first. For audience testing, that’s interests, and for ad creatives, that’s images.

Only then should you start conducting further A/B tests on elements that are less likely to result in large performance improvements.

This simplifies the process and allows you to achieve the biggest gains in performance early on.

The most important things to remember are:

- The more you test, the better. That goes for target audiences and ads.

- Conduct your tests in a tightly controlled manner so you can accurately determine which changes are responsible for changes in performance.

- Only target one interest per ad set so you can see which interest performs best.

- For the most accurate results, only have one ad in each ad set.

- Use the data in the Facebook ad reports to further refine your ad campaigns.

“Based on the average CPM figures we saw earlier, 20,000 impressions would cost $127,000.”

Your math is WAY off here. CPM is cost per 1000 impressions. 20,000 impressions would cost $127.

Thanks Joe! You’re right, it should be $127. Massive typo there.

One thing I have run into before is problems with running tests on too limited an audience. You may find your numbers are skewed if you hit an ad fatigue barrier before you hit statistical significance, and that will certainly distort your ad testing data.

One good way to see if this is a problem for you is to widen your targeting again once you run a test “just to see” and view what your data tells you once you think you have hit the mother lode of test results. For campaigns that are not retargeting driven, the first time your ad is seen is typically when the response will be best, provided the ad copy is on point and thoroughly vetted for performance beforehand.

That’s true Corey, you will run into problems if the audience size is too small. The thing to note here is that the definition of ‘too small’ changes depending on how much you’re spending. The more you spend, the quicker you ‘exhaust’ an audience.

Facebook ads will always decrease in performance over time. Ad fatigue is one factor. The way the algorithm works is another. Facebook will always show your ads to the people in the audience that it believes are most likely to convert first. That means after you run the ads for a while your ads start getting shown to people in your audience who are less likely to convert.

Hi Andrew,

I assume you wrote this for people with smaller budgets, as your recommendation of doing an A/B split test by separating each ad into its own ad-set ends up being a logistical nightmare.

Take, for example, a person running a 7-figure FB budget across 17 nationalities: you have 34 campaigns (1 direct response and 1 re-marketing campaign each, not including campaigns for DPA) with an average of 5 to 6 ad-sets (audience targeting) and 3 to 4 ads being tested at any given time per ad-set.

With your methodology above on A/B testing (I get why you recommend it: it gives the most accurate information, as you’re comparing like for like and FB is not automatically calling a winner within that first 24-hour window), what you propose for a large account is taking 136 ad-sets (34 campaigns * 4 ad-sets) and expanding that out to 544 ad-sets.

You’re then going to need to collate all the information about which ad-set worked for which market, nationality, language and target market, and then compile it with GA data so you can look at bounce rate, time on site and conversion rate + funnel paths.

I have tested this methodology of splitting our ad-sets to test ads vs having all the ads in 1 ad-set and letting Facebook control it (with some manual intervention, e.g. turning off the best performer for 1-2 hours so it has to use some of the other ads to get a better cross section).

I have not seen a better result from splitting the ads into separate ad-sets vs loading all the ads into a single ad-set and letting new creative try to out-perform the old creative based on a mixture of metrics: checkouts, CPA, CPC, conversion rate, bounce rate and add to cart.

As much as I’d like to agree with comparing apples to apples using 2 separate ad-sets with the same targeting, from memory of a chat with an enterprise account rep, Facebook will still show the ad with the better performance even when the audiences are overlapping, instead of giving the ads a fair share of time (like we would want in a true CRO test).

Have you seen this methodology implemented in a large-budget marketing campaign, and has it worked before? Wondering if it’s something I should revisit. I’d be interested to see some stats showing that separate ad-sets for ads consistently outperform the Facebook algorithm in finding the right ad, and where Facebook is consistently not picking the right winner.

Josh

Hey Josh,

It definitely changes the logistics, especially for larger budgets. I’ve found the easiest way to manage that is to be really strategic about how I name my ad sets and ads to make the data easier to manipulate once I export it. Same thing with the GA tags.

At the end of the day the goal is to test each ad against each audience to the point where you can collect enough data to achieve statistical significance. It sounds like you’re achieving that using your method of having multiple ads in one ad set and manually turning them on and off, so if that works for you I say keep using that method.

Just out of curiosity, do you find that turning off the top performing ad in the ad set for only 1-2 hours gives the other ads enough time to get meaningful data? I’ve found that time of day and day of week can greatly affect results. For example, with some ad sets/ads I’ll see 50% cheaper leads at certain times of the day. Same thing with day of the week. Do you factor that in when you’re turning ads on/off, or do you find it doesn’t really matter?

That’s interesting about FB showing the ad with better performance even if they are separated into 2 ad sets. Haven’t heard that before or noticed it in my data, but I might try to test it or ask an ad rep about it.

Andrew

Hey Andrew,

Thanks so much for this article. Exactly what I’ve been looking for! By chance do you have an article on which columns to focus on in Facebook’s dashboard for reporting revenue?

Shelby

You’re welcome Shelby. Glad to hear you found it helpful.

Unfortunately I don’t have a post like that at the moment, but I’ll keep it in mind as something to create in the future.

Andrew

I thought Facebook changed so now budget and impressions for an adset are spread out equally instead of showing more of the highest performing ad. Or am I incorrect?

Hey Robert,

It seems to do ok with 2 ads in the same ad set, but I still see a very unequal spread if there are any more than that.

Overall the behaviour doesn’t seem to have changed from what I’ve seen. Do you remember where you read that Facebook had made changes? Would be interested to check it out.

Thanks!

Honestly the best resource on A/B testing Facebook ads and on Facebook ads in general. Thank you so much for the help!

Thanks Malvin! Glad you found it useful. What was your biggest takeaway from the article?

Awesome post Andrew! What do you recommend as a testing budget for, let’s say, two ad sets? I have found that there is a remarkable difference in CPC and CPM when the initial daily budget is different but the ad sets being tested are the same, for example when starting a test with $20 compared with $100. (I guess Facebook wants us to spend more when they see we are willing to pay more.) Have you had that?

Glad you enjoyed it Johannes!

I’ve noticed a difference with higher daily budgets in general, doesn’t matter if I start small & scale up or start testing with a $100+ budget.

I think it’s more to do with the way the bidding process works than Facebook making a cash grab. For example, with a small budget Facebook can show your ads to the very best traffic available within your target audience. As your budget increases, Facebook needs to broaden that audience and as a result it needs to start showing your ads to more expensive segments of your target audience and people who are less likely to click / convert.

I agree with you. I have two ads in one ad set. That keeps customer engagement higher.