While running A/B tests on all your traffic at once is often tempting, it’s best to target mobile and desktop audiences separately.

Here are five reasons why.


#1: Different things work

What wins for desktop audiences often doesn't win for mobile users. If you bucket all your traffic into a single test, the result might come out as “no difference” when in fact mobile was winning big and desktop was performing poorly.

This is something I see every day in my line of work: different things work for desktop and mobile. So while your mobile/responsive website might start out as a small-screen version of your desktop site, you might end up with two very different experiences.
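Here is how a pooled test can hide opposite effects. This minimal Python sketch uses made-up visitor and conversion counts, chosen only for illustration: the variant wins on mobile, loses on desktop, and the pooled result comes out flat.

```python
from dataclasses import dataclass


@dataclass
class Arm:
    visitors: int
    conversions: int

    @property
    def rate(self) -> float:
        return self.conversions / self.visitors


def relative_lift(control: Arm, variant: Arm) -> float:
    """Relative change in conversion rate of the variant vs. the control."""
    return variant.rate / control.rate - 1


def pool(*arms: Arm) -> Arm:
    """Combine segments into one bucket, as a single all-traffic test would."""
    return Arm(sum(a.visitors for a in arms), sum(a.conversions for a in arms))


# Hypothetical results, for illustration only (not real test data).
mobile_control, mobile_variant = Arm(10_000, 300), Arm(10_000, 360)
desktop_control, desktop_variant = Arm(10_000, 500), Arm(10_000, 440)

mobile_lift = relative_lift(mobile_control, mobile_variant)
desktop_lift = relative_lift(desktop_control, desktop_variant)
pooled_lift = relative_lift(pool(mobile_control, desktop_control),
                            pool(mobile_variant, desktop_variant))

print(f"mobile:  {mobile_lift:+.0%}")
print(f"desktop: {desktop_lift:+.0%}")
print(f"pooled:  {pooled_lift:+.0%}")
```

With these numbers, mobile shows +20%, desktop shows -12%, and the pooled test reports +0%. That is the "no difference" verdict, even though neither device actually behaved that way.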

Mindset and use case differences

Desktop and mobile are not just different devices; each comes with a different mindset and different use cases. People use mobile phones more often on the go: while standing in the Starbucks line, and even while waiting at a red light. As a result, mobile users often have shorter attention spans.

Tablets are more similar to desktops; they're not really mobile devices. Most tablets are wifi-only: “Only 20 percent of tablets are sold with wireless chipsets. And only half of those devices are initially connected to wireless networks.” So tablets are mostly used at home, often while sitting on the couch. Users will frequently browse on their tablet while watching Netflix or Hulu.

Tablets are similar to mobile when it comes to typing: people don't like to type on them. Not only does typing on a tablet cause muscle problems, but typing speed and accuracy also drop by 60 percent and 11 percent, respectively.

If your tablet traffic is large enough to reach an adequate sample size on its own, test tablets separately. If not, bucket tablets together with desktop, since more often than not those users respond to the same things.
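The tablet rule can be expressed as a small assignment function. This is a minimal sketch: the device labels and the `min_tablet_visitors` threshold are hypothetical placeholders, and in practice the threshold would come from your own sample-size calculation.

```python
KNOWN_DEVICES = {"desktop", "mobile", "tablet"}


def segment_for(device: str, tablet_visitors: int, min_tablet_visitors: int) -> str:
    """Map a visitor's device category to the test segment they belong in.

    Tablets get their own segment only when tablet traffic can reach an
    adequate sample size on its own; otherwise they are grouped with desktop.
    """
    if device not in KNOWN_DEVICES:
        raise ValueError(f"unknown device category: {device!r}")
    if device == "tablet" and tablet_visitors < min_tablet_visitors:
        return "desktop"
    return device


# Hypothetical numbers: 20,000 tablet visitors needed for a standalone tablet test.
print(segment_for("tablet", tablet_visitors=6_000, min_tablet_visitors=20_000))
print(segment_for("tablet", tablet_visitors=25_000, min_tablet_visitors=20_000))
print(segment_for("mobile", tablet_visitors=6_000, min_tablet_visitors=20_000))
```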

#2: Your desktop and mobile traffic volumes are different

If your mobile and desktop traffic is split exactly 50/50, this doesn't apply to you, but odds are the split is not even.

You might think you can do post-test segmentation instead: look at the test results for each device segment once the test is done. The potential problem is that your sample size may be large enough for all traffic combined, but not for the mobile and desktop segments individually. When that happens, you only know what the result is “on average”, and on average that's bullshit.
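To see why segments run short, you can estimate how many visitors each variant needs. The sketch below uses the standard normal-approximation formula for comparing two conversion rates (a two-sided two-proportion z-test). The baseline rate and target lift are hypothetical inputs, and dedicated sample-size calculators may use slightly different formulas.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline: float, relative_lift: float,
                         alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed in EACH variant to detect `relative_lift`
    over `baseline` with a two-sided two-proportion z-test."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical: 3% baseline conversion rate, aiming to detect a 20% relative lift.
n = visitors_per_variant(0.03, 0.20)
print(n)
```

With these inputs the result is roughly 13,900 visitors per variant. That requirement applies to every segment you want to read on its own, so a test sized for all traffic combined can leave each device segment well short of it.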

To solve this, you’d need to run the test long enough to have enough sample size for each device category. This has another problem: your test takes a needlessly long time.

Let's say you have 3x more desktop traffic than mobile traffic. You run a test and reach an adequate sample size for the desktop segment. The problem is that you can't stop the test and move on to testing something else yet, because you still need to wait for the mobile segment to reach its sample size (if you want to know how the treatment performed for mobile users).
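The arithmetic behind that wait is simple. This minimal sketch assumes traffic is split evenly across variants. The 14,000 visitors per variant and the 3,000/1,000 daily visitors (the 3:1 desktop-to-mobile split) are hypothetical numbers.

```python
import math


def days_to_reach(visitors_per_variant: int, variants: int, daily_visitors: int) -> int:
    """Whole days until every variant has `visitors_per_variant` visitors,
    assuming traffic is split evenly across variants."""
    return math.ceil(visitors_per_variant * variants / daily_visitors)


# Hypothetical inputs: an A/B test (2 variants), 14,000 visitors needed per
# variant, and 3x more desktop than mobile traffic.
needed = 14_000
daily = {"desktop": 3_000, "mobile": 1_000}

for device, visitors in daily.items():
    print(device, days_to_reach(needed, 2, visitors), "days")
print("all traffic", days_to_reach(needed, 2, sum(daily.values())), "days")
```

Under these assumptions the desktop segment has enough data after 10 days, but reading the mobile segment means keeping the same test live for 28 days. The pooled 7 days only buys you the blended result. With separate device tests, the desktop test can end at day 10 and make room for the next one.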

So essentially you are slowing down your testing progress when you don’t have to. When you run tests for different device categories separately, this issue will go away.

#3: Not all mobile traffic is equal

When you combine desktop and mobile traffic together, you stop thinking about the particularities of mobile traffic. But you shouldn’t.

Mobile is not just mobile: there are also significant differences between Android and iPhone users. The first is purchasing habits. iPhone users spend 4x more money on apps, and a whopping 78% of all purchases made on mobile phones are made on iPhones (vs Android). The second difference, which causes the first, is demographics. Lots of cheap Android phones are available, so more low-income people have Androids. There are far more low-income people than rich people, which is also why Android has 80% market share and rising.

The bottom line for us: people who use Android and people who use iOS are likely to be different. Not everybody, of course, and we can't generalize, but a good portion of each user base is different.

Different strokes for different folks: what works for Android often does not work for iOS, and vice versa. So either target users of each mobile operating system separately, or at least check performance across operating system segments in your post-test analysis.
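For the post-test route, the analysis comes down to grouping results by operating system and variant before you compute conversion rates. This is a minimal sketch. It assumes your testing tool can export one record per visitor, and the `os`, `variant`, and `converted` field names are hypothetical.

```python
from collections import defaultdict


def conversion_by_segment(records):
    """Return {(os, variant): conversion_rate} from per-visitor records."""
    visitors = defaultdict(int)
    conversions = defaultdict(int)
    for r in records:
        key = (r["os"], r["variant"])
        visitors[key] += 1
        conversions[key] += int(r["converted"])
    return {key: conversions[key] / visitors[key] for key in visitors}


# Tiny hypothetical export, for illustration only.
records = [
    {"os": "iOS", "variant": "A", "converted": True},
    {"os": "iOS", "variant": "A", "converted": False},
    {"os": "iOS", "variant": "B", "converted": True},
    {"os": "iOS", "variant": "B", "converted": True},
    {"os": "Android", "variant": "A", "converted": True},
    {"os": "Android", "variant": "A", "converted": False},
    {"os": "Android", "variant": "B", "converted": False},
    {"os": "Android", "variant": "B", "converted": False},
]

for (os_name, variant), rate in sorted(conversion_by_segment(records).items()):
    print(f"{os_name:8} {variant}: {rate:.0%}")
```

The same caveat from #2 applies: each operating system segment needs its own adequate sample size before its numbers mean anything.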

#4: You might want to optimize for different outcomes

While mobile internet usage is way up (more Google searches are now done on mobile devices), people still tend to switch to desktops to complete their transactions: research on mobile, (more) purchasing on desktop. According to studies, people switch between devices anywhere from 21 to 27 times an HOUR.

Ecommerce conversion rates for mobiles still lag behind desktops:


What does that mean for you?

It might mean that you should stop trying to close the sale during mobile visits and instead try to capture the visitor's email, so you can bring them back on a desktop to finish the purchase. Email remarketing can be gold here. I'm not saying that's how it works in your case, but it's something I recommend you test.

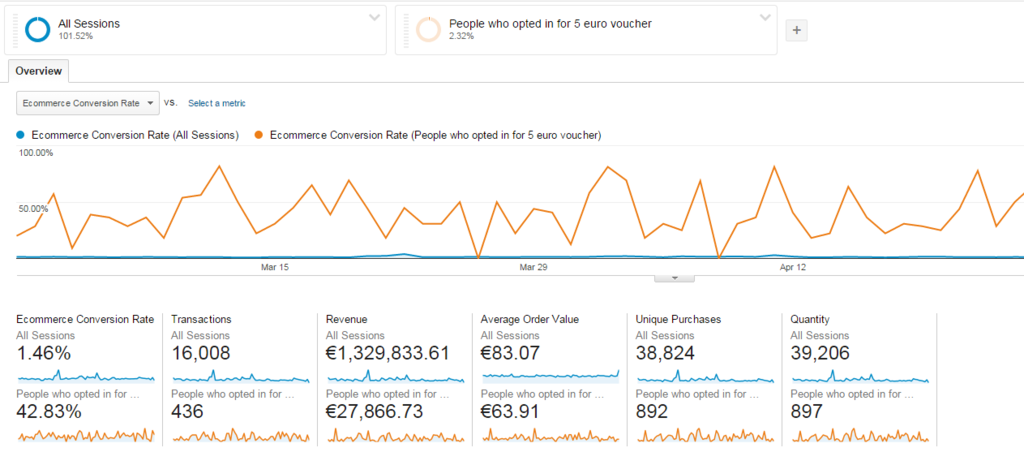

Capturing emails on any site, especially an ecommerce site, is a great idea no matter what. How do you get the email? You can start by offering a discount coupon; it can work wonders. Check out these numbers:

That's right: the conversion rate for the segment that opted in (via a popup) to get a 5 euro voucher is 42% here. Once they have the coupon, they're shopping, not merely browsing.

If you test going for email opt-ins on mobile and it works in your particular context, you're effectively optimizing for different outcomes on different devices. That's a very good reason to run separate tests for each device category.
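One way to make the different outcomes explicit is to give each device-specific test its own primary metric. This is a minimal sketch. The metric names (`purchases`, `email_optins`) and all counts are hypothetical, and the idea is to judge each device's variant against its own goal.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceTest:
    device: str
    primary_metric: str  # the outcome this device's test is judged on


# Hypothetical setup: close the sale on desktop, capture the email on mobile.
TESTS = [
    DeviceTest("desktop", "purchases"),
    DeviceTest("mobile", "email_optins"),
]


def primary_rate(test: DeviceTest, counts: dict, visitors: int) -> float:
    """Conversion rate on the test's own primary metric."""
    return counts[test.primary_metric] / visitors


# Hypothetical counts for one variant on each device.
results = {
    "desktop": ({"purchases": 150, "email_optins": 40}, 5_000),
    "mobile": ({"purchases": 20, "email_optins": 300}, 5_000),
}

for test in TESTS:
    counts, visitors = results[test.device]
    print(test.device, test.primary_metric, f"{primary_rate(test, counts, visitors):.1%}")
```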

#5: You can create more tests faster

The more tests you run, the more money you can potentially make. If you set up tests targeting only a single device category, each test takes less development and QA time, so you can launch tests faster. Every day has value, and every day without a test running is a day of regret by default.

Start with the device category that has the most traffic (thus bigger impact on your revenue), followed by other device categories.

Conclusion

Don't run the same test across all devices. Always separate mobile and desktop tests; it can make a world of difference.

It might also be worth testing mobiles on wifi and mobiles on 4G/3G separately (ref. Bart Schutz's talk at Conversion Conference in LV), as that could indicate quite different physical contexts.

Indeed, definitely a segmentation to pay attention to!

Perfect article. I really like seeing the differences between Apple and Android users; sometimes it's very interesting how differently they spend money. Thanks again, and more articles like this, please.

This is definitely true, their behavior can be quite different just like the behavior from different desktop browsers.

This is amazing. Yes, all data points to optimizing individually.

Good, but really, not sure this is about testing per se. It is more an argument that there should be different experiences between mobile and desktop, no? Differentiated Testing is just a subset of that.

You’re absolutely right.

So if right now the website is pretty much the same for both (just responsive or whatever), then testing is a good way to go toward the different experiences.

Yes, assuming you have some capacity to enforce multiple contextual experiences.

So with website-builders such as Webydo (www.webydo.com) would you still suggest this approach even though one of its key features is built-in responsive design?

Of course – responsive design changes nothing

Nice Article!!

Mobile has a completely different user experience than desktop. Visitors on mobile behave differently than visitors on desktop. For example, desktop users are more likely to convert on the pricing page than mobile users, because mobile users are more distracted and less prepared to make a purchasing decision while on the move.